Computer vision

Contribution to RADA (Regularized Adversarial Domain Adaptation)

RADA [1] is software developed by Lawrence Livermore National Laboratory (LLNL) that corrects the bias of climate models using a generative adversarial network (GAN). During my summer internship at LLNL in 2022, I contributed by discovering bugs in the code and writing a user-friendly manual to help researchers at the Lab apply the code to various climate models.

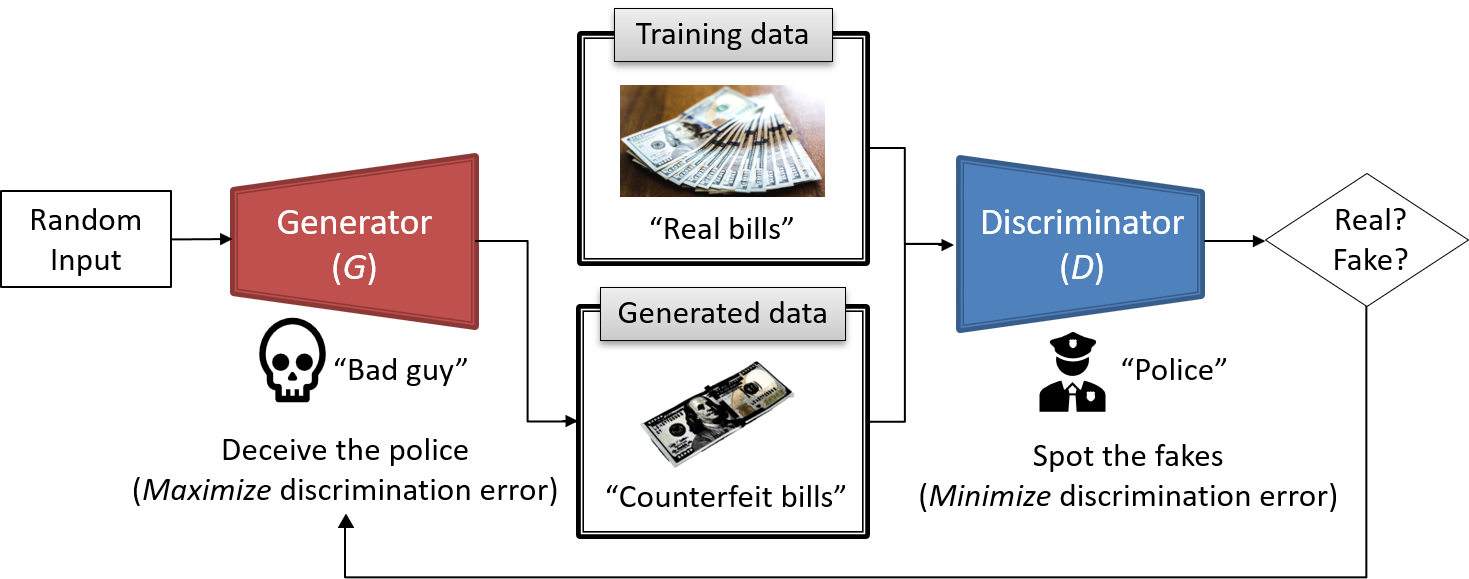

Generative adversarial network (GAN)

GANs are among the most successful models for generating photorealistic images. A GAN consists of two neural networks: a generator and a discriminator. The generator is trained to generate images that closely resemble those in the training dataset. Conversely, the discriminator is trained to classify whether a given image is real (drawn from the dataset) or fake (produced by the generator). The two networks are trained simultaneously in a zero-sum game, in which the generator tries to maximize the discriminator's classification error while the discriminator tries to minimize it.
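The zero-sum game can be illustrated with the standard minimax GAN losses. The following is a minimal sketch in plain Python, not code from RADA; the function names and the toy probability values are assumptions made for illustration.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy that the discriminator minimizes.

    d_real: discriminator outputs D(x) in (0, 1) for real images.
    d_fake: discriminator outputs D(G(z)) in (0, 1) for generated images.
    """
    real_term = sum(-math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Minimax generator loss: the negated fake term of the discriminator loss.

    Minimizing it pushes D(G(z)) toward 1, i.e. it maximizes the
    discriminator's error on generated images.
    """
    return sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)

# Toy values (hypothetical): a confident discriminator vs. a fooled one.
confident = discriminator_loss(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])
fooled = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
print(confident < fooled)
```

In practice the generator is often trained with a non-saturating variant of this loss, but the minimax form above matches the zero-sum description most directly.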

Domain adaptation by cycle GAN

The generator can be trained to learn a mapping from one domain (e.g., zebra images) to another (e.g., horse images). This is achieved by regularizing the generator to learn a forward mapping (e.g., zebras to horses) as well as an inverse mapping (e.g., horses to zebras). This idea is called cycle GAN [2], and it outperforms the traditional GAN on this kind of domain translation.
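The regularization term can be sketched as a cycle-consistency loss: mapping a sample forward and then back should return the original sample. The code below is a toy illustration under stated assumptions, not the implementation from [2] or from RADA. `G` and the two versions of `F` are hypothetical stand-ins for trained networks, and samples are flat lists of numbers rather than images.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally sized samples."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def cycle_consistency_loss(G, F, x_batch, y_batch):
    """Penalty for failing to recover a sample after a round trip.

    G maps domain X to domain Y (forward), F maps Y back to X (inverse).
    """
    forward = sum(l1_distance(F(G(x)), x) for x in x_batch) / len(x_batch)
    backward = sum(l1_distance(G(F(y)), y) for y in y_batch) / len(y_batch)
    return forward + backward

# Hypothetical stand-ins for the two generators.
def G(x):
    return [v + 1.0 for v in x]

def F_exact(y):
    return [v - 1.0 for v in y]   # exact inverse of G

def F_poor(y):
    return [v - 0.5 for v in y]   # only partly undoes G

x_batch = [[0.0, 2.0]]
y_batch = [[1.0, 3.0]]
print(cycle_consistency_loss(G, F_exact, x_batch, y_batch))
print(cycle_consistency_loss(G, F_poor, x_batch, y_batch))
```

The loss vanishes only when the two mappings invert each other, which is what keeps the learned translation from discarding the content of the input.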

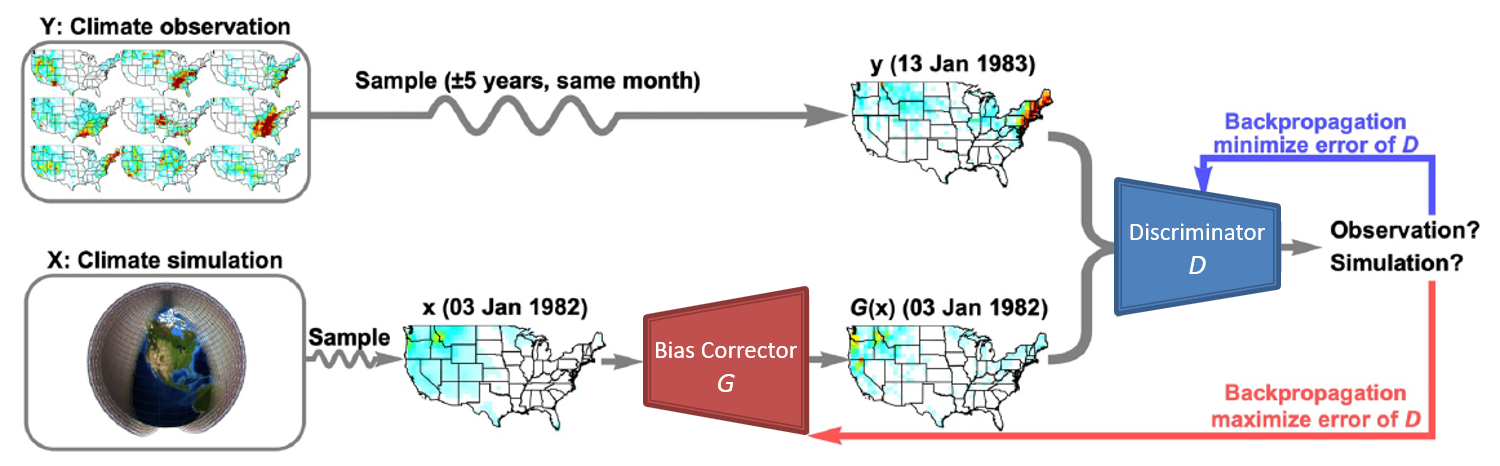

Bias correction of climate models by RADA

RADA uses the idea of cycle GAN to correct the biases of climate models. The generator is trained to correct the bias in simulation data so that it aligns more closely with real climate data. The discriminator is trained to distinguish the real climate data from the bias-corrected simulation data as accurately as possible. The generator is regularized for cycle consistency and dynamical consistency.

Funding information

This internship project was performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under contract DE-AC52-07NA27344.

Detection and Classification of Airplanes by SSD Algorithm

In 2021, I collaborated with a research team at Sandia National Laboratories on a project to detect and classify airplanes in overhead images for national security purposes. The following is a summary of my contributions to the project. Please note that the images provided are not the actual data used in the project.

Challenges in airplane detection and classification

There were several challenges in classifying airplanes as passenger/cargo planes, fighter jets, and small planes. First, the airplanes in the test dataset had a wide range of attributes. Second, some images were affected by noise such as glint and blur, or were partially obscured by cloud cover or cut off by the edge of the image. Finally, the training dataset was biased, as only a limited number of training samples were available for military planes.

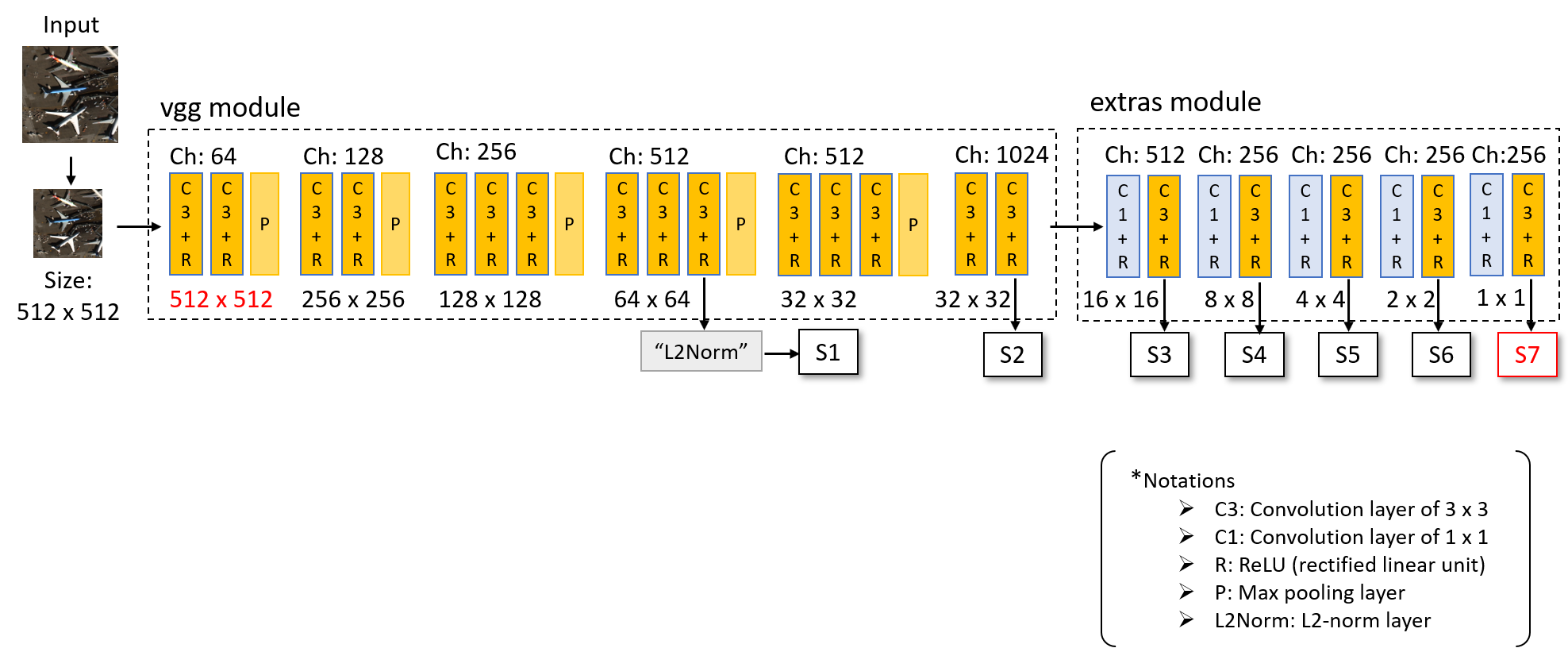

Single Shot Detector (SSD) algorithm

We used the SSD algorithm [4], a deep convolutional neural network, to detect and classify objects in a single shot. This network is trained to predict category scores and box offsets using convolutional filters. To improve accuracy, the network employs seven receptive fields, each of which is designed to target objects of a different size. The SSD algorithm outperformed previous cutting-edge detectors such as Faster R-CNN and YOLO.
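To make "box offsets" concrete: the network does not predict absolute coordinates but offsets relative to a fixed default box. The sketch below follows the offset parameterization described in the SSD paper, with boxes given as (center x, center y, width, height). It is an illustration only, not the project's code, and the function names and example boxes are assumptions. Many implementations additionally scale the offsets by fixed variance constants, which is omitted here.

```python
import math

def encode_offsets(gt_box, default_box):
    """Regression targets for a ground-truth box relative to a default box."""
    gcx, gcy, gw, gh = gt_box
    dcx, dcy, dw, dh = default_box
    return (
        (gcx - dcx) / dw,      # center shift, in units of default width
        (gcy - dcy) / dh,      # center shift, in units of default height
        math.log(gw / dw),     # log scale change in width
        math.log(gh / dh),     # log scale change in height
    )

def decode_offsets(offsets, default_box):
    """Invert encode_offsets: turn predicted offsets back into a box."""
    tx, ty, tw, th = offsets
    dcx, dcy, dw, dh = default_box
    return (dcx + tx * dw, dcy + ty * dh, dw * math.exp(tw), dh * math.exp(th))

# Hypothetical boxes in normalized image coordinates.
default_box = (0.5, 0.5, 0.2, 0.2)
airplane_box = (0.55, 0.48, 0.3, 0.1)
offsets = encode_offsets(airplane_box, default_box)
print(decode_offsets(offsets, default_box))
```

Normalizing by the default box size keeps the regression targets on a similar scale for small and large objects.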

However, the SSD algorithm still had difficulty detecting fighter jets and airplanes against snowy backgrounds. To address this issue, we fine-tuned the hyperparameters of the SSD algorithm and trained the network with a synthetic dataset. This allowed us to improve the algorithm's performance in detecting those types of planes.

Data augmentation

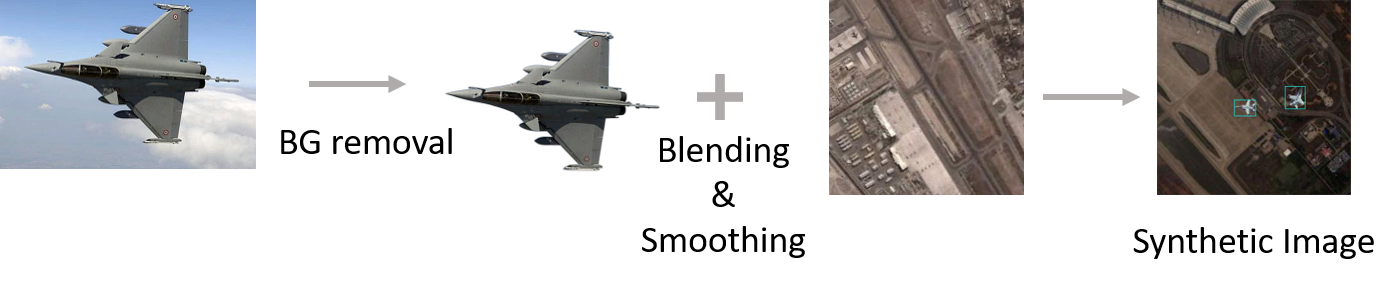

To generate a synthetic dataset of fighter jets, we followed these steps:

- We collected ~100 images of fighter jets from multiple datasets and automatically removed their backgrounds. We then rotated each fighter jet image and computed its bounding box from the pixel values.

- We blended the fighter jets with overhead airport images. To improve the photorealism, we applied Poisson blending and a Gaussian blur filter to smooth the boundaries. We ensured that the fighter jets did not overlap by considering the pre-computed bounding boxes geometrically.

- We repeated this process to generate thousands of training samples. This approach resulted in an 8% improvement in the average precision of classification.
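The geometric parts of these steps can be sketched as follows: computing a bounding box from the non-transparent pixels of a cut-out, and placing several cut-outs on a background so that their boxes do not overlap. This is a simplified illustration, not the pipeline used in the project. Rotation, Poisson blending, and blurring are omitted, and the function names, the list-of-lists image representation, and the rejection-sampling placement are all assumptions.

```python
import random

def bounding_box(alpha, threshold=0):
    """Tight box (top, left, bottom, right), inclusive, around pixels whose
    alpha value exceeds the threshold. Returns None for an empty mask."""
    rows = [r for r, row in enumerate(alpha) if any(v > threshold for v in row)]
    cols = [c for c in range(len(alpha[0]))
            if any(row[c] > threshold for row in alpha)]
    if not rows:
        return None
    return (rows[0], cols[0], rows[-1], cols[-1])

def boxes_overlap(a, b):
    """Axis-aligned overlap test for boxes given as (x, y, width, height)."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return ax < bx + bw and bx < ax + aw and ay < by + bh and by < ay + ah

def place_without_overlap(sizes, canvas_w, canvas_h, rng, max_tries=100):
    """Choose a random position for each (width, height) so that no two
    placed boxes overlap. Boxes that cannot be placed are skipped.
    Assumes every box fits inside the canvas."""
    placed = []
    for w, h in sizes:
        for _ in range(max_tries):
            candidate = (rng.randint(0, canvas_w - w),
                         rng.randint(0, canvas_h - h), w, h)
            if not any(boxes_overlap(candidate, p) for p in placed):
                placed.append(candidate)
                break
    return placed

# Toy alpha mask of a background-removed cut-out (0 = transparent).
alpha = [
    [0, 0, 0, 0],
    [0, 255, 255, 0],
    [0, 0, 255, 0],
]
print(bounding_box(alpha))

rng = random.Random(0)  # fixed seed so the sketch is reproducible
print(place_without_overlap([(20, 10), (15, 15), (10, 30)], 100, 100, rng))
```

The placed boxes double as ground-truth annotations for the synthetic image, so no manual labeling is needed.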